The price of your product is wrong.

It’s a harsh reality you’re better off accepting sooner rather than later.

Everyone gets pricing wrong. Even OpenAI, with all its artificial intelligence, isn’t spared.

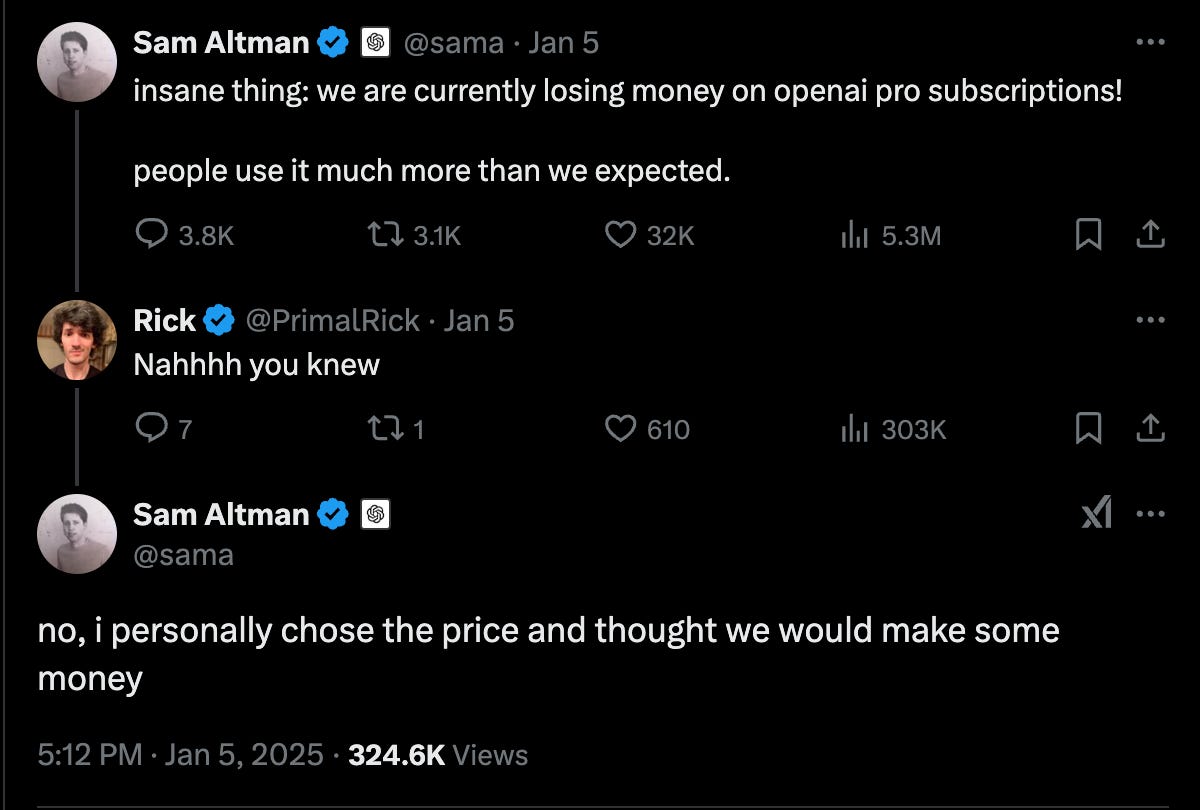

Case in point: Sam Altman recently posted that their $200/month ChatGPT Pro plan is priced incorrectly (it’s not profitable), commenting: “[But] I personally chose the price and thought we would make some money.”

HA! The infamous last words of every bad pricing decision. Am I right, or am I right??

Three modes of failure in pricing decisions

Mode 1: HiPPO (Highest Paid Person's Opinion) makes the pricing decisions (cough, Sam Altman, cough). After all, they need to feel powerful. Of course, they pray and hope to be right, and occasionally, they might be. But 99% of the time, they swing and miss. I don’t blame these poor HiPPOs - our corporate environment positions executives as all-knowing, future-seeing, always-right mythical beings who supposedly have all the answers. After all, they are just trying to play the part - send your condolences.

Mode 2: Over-reliance on qualitative data. Gasp! Am I really saying that being data-driven here can lead to failure? Yes, yes I am.

Asking people how much they’re willing to pay for your product isn’t a great predictor of what they’ll actually pay. This is because people are much quicker to spend theoretical dollars than real dollars. If a friendly person/survey asks them, “Hey, would you pay $100 for this product?” they don’t want to seem cheap! And maybe in certain situations they truly would… so “Sure,” they say, “I’d even pay $120.” But when the time comes to actually pay? Suddenly, it’s a very different story and even $80 is a non-starter.

One main reason for this is that everyone (me, you, and the potential customer) tends to ignore the cost incurred by the friction it takes to get to the value. The equation you need to remember is:

Price < (Perceived Value – Friction)

That last variable is crucial: The price point has to be lower than the perceived value… minus friction! The more friction that has to be overcome to get to the perceived value, the lower the price needs to be. Surveys, focus groups, and even early A/B tests generally assume perfect conditions: high perceived value and zero friction. That’s never the reality. So, when you roll out a price based on theoretical willingness-to-pay, expect reality to hit hard.
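To make the inequality concrete, here is a minimal sketch in Python. The function name and the dollar figures are my own illustrative assumptions (friction is rarely this easy to put a number on), so treat it as a way to reason about the equation, not a measurement method.

```python
def price_clears_bar(price: float, perceived_value: float, friction: float) -> bool:
    """Check the rule of thumb: Price < (Perceived Value - Friction)."""
    return price < perceived_value - friction


# Hypothetical numbers: a survey respondent says the product is worth $120,
# but setup, onboarding, and switching hassle "cost" them roughly $45.
stated_value = 120.0
friction_cost = 45.0

for candidate_price in (100.0, 80.0, 70.0):
    verdict = "viable" if price_clears_bar(candidate_price, stated_value, friction_cost) else "too high"
    print(f"${candidate_price:.0f}: {verdict}")
```

With these made-up inputs the ceiling is $75, which is how a customer who said “I’d even pay $120” can still balk at $80.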

This isn’t to say you shouldn’t use data, but quantitative data > qualitative data in this context. That said, qualitative insights are valuable as input before launching tests to gather quantitative data.

Mode 3: Going to market with a singular price point, as if there needs to be no further discussion. I know all of us have been part of a ‘big launch’ with a carefully determined price point (maybe your company even shelled out $500,000 to get Simon-Kucher involved) and then BAM—major fail. Conversion rates are low, retention is in the toilet, and oops, no contingency planning. So, the next 6 months are consumed with some rollback or ‘testing-out’ activities. Here is a hard truth: If you’re settled on just a singular price point, it’s almost guaranteed to be wrong (no matter how you came up with it).

So. If you can’t trust your CEO’s guess, you can’t rely on research, and even a $500K Simon-Kucher crystal ball doesn’t give you the right answer… what are you supposed to do?

P.S. Side note on Simon-Kucher: They are a huge and very (very) expensive firm that is quite popular among executives seeking "solid pricing recommendations." And listen, these folks are incredible at research—the best I’ve ever seen, hands down. But I’ve also witnessed countless instances where their recommendations nearly kill the companies that implement them.

Why?

A. They focus on short-term revenue, often at the expense of long-term growth loops. If your business relies on virality, beware.

B. They don’t stick around to operationalize or optimize the new price points, leaving teams unprepared to handle the fallout.

For a truly complete answer, you’ll need to understand your monetization model and you should take my Reforge monetization & pricing course. But for today, let me walk you through how to approach changing your prices—whether that’s updating existing plans or rolling out new offerings.

How to get the price right

Start off by accepting the truth that your new price will almost definitely be wrong. This is painful (especially if your CEO picked it), but the faster you can admit this, the faster you can get started on finding the optimal price point. Here’s what I recommend:

1. Pick metrics that measure impact on the entire growth ecosystem

It’s not just about how many people buy the plan. It’s also about how well customers retain, whether they expand, and how much they cost to serve. Metrics should include:

Conversion rate: How many people buy at each price point?

Churn rate: How many fail to renew or buy again?

Retention/Engagement rate: How many people engage and retain?

Expansion rate: How many people upgrade to a higher plan?

Cost impact: For products with high variable costs (looking at you, AI enthusiasts), how does usage at each price affect your margins?
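The metrics above can be tracked per price cohort with something like the sketch below. The `PriceCohort` fields and the `summarize` helper are hypothetical names I made up for illustration, and the definitions are deliberately simple (churn is just one minus the renewal share); engagement is left out because it depends entirely on how your product defines an active user.

```python
from dataclasses import dataclass


@dataclass
class PriceCohort:
    """Counts for everyone who was shown one price point (hypothetical schema)."""
    price: float
    visitors: int                   # people who saw this price
    buyers: int                     # people who purchased at this price
    renewed: int                    # buyers who renewed or bought again
    upgraded: int                   # buyers who moved to a higher plan
    variable_cost_per_buyer: float  # e.g. compute cost for an AI product


def summarize(cohort: PriceCohort) -> dict[str, float]:
    """Compute conversion, churn, expansion, and gross margin for one cohort."""
    buyers = cohort.buyers
    return {
        "conversion_rate": buyers / cohort.visitors if cohort.visitors else 0.0,
        "churn_rate": 1 - cohort.renewed / buyers if buyers else 0.0,
        "expansion_rate": cohort.upgraded / buyers if buyers else 0.0,
        "gross_margin": (
            (cohort.price - cohort.variable_cost_per_buyer) / cohort.price
            if cohort.price
            else 0.0
        ),
    }


# Made-up example cohort, not real data.
example = PriceCohort(price=25.0, visitors=1000, buyers=50,
                      renewed=40, upgraded=5, variable_cost_per_buyer=10.0)
print(summarize(example))
```

Running the same summary for each price variation, and re-running it as cohorts age, gives you a side-by-side view instead of a conversion-only snapshot.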

Conversion is easy and quick to measure. The rest… not so much. Every single pricing rollout will require a measurement time frame of at least 3 to 6 months (or longer). This doesn’t mean you need to collect new observations the entire time, but you will need to monitor cohorts over that period to understand their behavior.

2. Run a price sensitivity test

One of the most powerful tests you should run *every few years* is a simple price sensitivity test. In its simplest form, you test three price variations: your current price, plus a lower and a higher version. So, say your price is $25, then test $19 and $29. This will allow you to begin plotting a rudimentary elasticity curve of your conversion and churn rates that can help forecast additional price points.
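Here is one rough way to turn a three-price test into that rudimentary curve. I’m using the standard arc (midpoint) elasticity formula on conversion rates; the conversion numbers are invented purely for illustration, and the function names are my own. A real analysis would also check sample sizes and repeat the exercise for churn.

```python
def arc_elasticity(p1: float, q1: float, p2: float, q2: float) -> float:
    """Midpoint elasticity: % change in quantity divided by % change in price."""
    pct_change_q = (q2 - q1) / ((q1 + q2) / 2)
    pct_change_p = (p2 - p1) / ((p1 + p2) / 2)
    return pct_change_q / pct_change_p


def revenue_per_visitor(price: float, conversion_rate: float) -> float:
    """First-purchase revenue per visitor; ignores churn and expansion."""
    return price * conversion_rate


# Hypothetical test results: price -> conversion rate (not real data).
results = {19.0: 0.06, 25.0: 0.05, 29.0: 0.04}

prices = sorted(results)
for low, high in zip(prices, prices[1:]):
    e = arc_elasticity(low, results[low], high, results[high])
    print(f"${low:.0f} -> ${high:.0f}: elasticity {e:.2f}")

for price in prices:
    print(f"${price:.0f}: revenue per visitor {revenue_per_visitor(price, results[price]):.2f}")
```

An elasticity between 0 and -1 means conversion drops more slowly than price rises, so revenue per visitor goes up; below -1, the higher price loses more than it gains. That only covers the first purchase, which is why the retention and cost metrics from step 1 still matter.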
